Exploring the Raspberry Pi Cluster

In the previous blog I described the initial steps to build and set up a Raspberry Pi cluster. With this initial setup in place, the next step is to connect the four Raspberry Pis together to form a Kubernetes cluster.

Creating a Kubernetes Cluster

As described in the Kubernetes documentation, a Kubernetes cluster consists of a control plane and several worker nodes. The worker nodes run the containerized applications, while the control plane is responsible for managing the worker nodes. The final configuration steps for my Raspberry Pi cluster are to:

- Define which node acts as the control plane (note: in a production environment the control plane would also be distributed across several computers)

- Add the worker nodes to the control plane.

Note that the following description is specific to MicroK8s. However, it should be easy to adapt to other Kubernetes distributions.

Defining the control plane and adding worker nodes to the cluster is the last step that requires logging into the individual Raspberry Pis directly. Once the cluster is set up, all interactions with the cluster are performed using kubectl, the Kubernetes CLI.

Adding Worker Nodes

In my cluster the Raspberry Pi with the host name pi-picard is obviously going to run the control plane 🖖. To add worker nodes, it is necessary to log into this node using SSH (e.g. ssh ubuntu@pi-picard). Once you are logged into the Raspberry Pi that is going to be the control plane, execute the following command (caveat: this is specific to MicroK8s):

microk8s add-node

The result of this command should be some joining instructions similar to the ones below:

From the node you wish to join to this cluster, run the following:

microk8s join 192.168.178.251:25000/b5379006e31afba0056940b2ceea37f3

If the node you are adding is not reachable through the default interface you can use one of the following:

microk8s join 192.168.178.251:25000/b5379006e31afba0056940b2ceea37f3

microk8s join 10.1.2.64:25000/b5379006e31afba0056940b2ceea37f3

The next step is therefore to log into the worker nodes (pi-riker, pi-data and pi-worf in my case) and execute the join command there. By default a join token can only be used once, so microk8s add-node has to be run on the control plane again for each additional worker. Once the worker nodes have joined the cluster, kubectl can be used to show the nodes in the cluster.
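When several workers have to be joined, it can be convenient to pull the join commands out of the add-node output instead of copying them by hand. The following Python sketch only parses text (for example output captured with subprocess.run); extract_join_commands and join_command_for_network are hypothetical helper names, and the regular expression assumes the output format shown above.

```python
import re
from typing import Optional

# Assumption: every join instruction is printed on its own line in the form
# "microk8s join <address>:<port>/<token>", as in the sample output above.
JOIN_LINE = re.compile(r"^\s*(microk8s join \S+)\s*$")


def extract_join_commands(add_node_output: str) -> list[str]:
    """Return the distinct join commands printed by `microk8s add-node`, in order."""
    commands: list[str] = []
    for line in add_node_output.splitlines():
        match = JOIN_LINE.match(line)
        if match and match.group(1) not in commands:
            commands.append(match.group(1))
    return commands


def join_command_for_network(commands: list[str], prefix: str) -> Optional[str]:
    """Pick the join command whose address starts with `prefix` (e.g. "192.168.178.")."""
    for command in commands:
        address = command.split()[2]  # "<address>:<port>/<token>"
        if address.startswith(prefix):
            return command
    return None
```

The first entry corresponds to the default interface; for my Raspberry Pis, join_command_for_network(commands, "192.168.178.") would select the address on the home network.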

In my cluster, executing the following command (no is the short name for the nodes resource) results in the output below:

microk8s kubectl get no

NAME        STATUS   ROLES    AGE   VERSION
pi-data     Ready    <none>   30d   v1.20.2-34+c6851e88267786
pi-riker    Ready    <none>   30d   v1.20.1-34+97978f80232b01
pi-picard   Ready    <none>   30d   v1.20.2-34+c6851e88267786
pi-worf     Ready    <none>   30d   v1.20.2-34+c6851e88267786

Furthermore, running microk8s status shows some additional information about the cluster:

microk8s is running
high-availability: yes
  datastore master nodes: 192.168.178.251:19001 192.168.178.252:19001 192.168.178.253:19001
  datastore standby nodes: 192.168.178.254:19001
addons:
  enabled:
    dashboard            # The Kubernetes dashboard
    dns                  # CoreDNS
    ha-cluster           # Configure high availability on the current node
    metrics-server       # K8s Metrics Server for API access to service metrics
  disabled:
    ...

This output shows that high availability is configured in my cluster and that the dns, dashboard and metrics-server add-ons are enabled. Add-ons can also be enabled and disabled using the microk8s CLI (e.g. microk8s enable dashboard or microk8s disable dashboard). See the MicroK8s documentation for further details.
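With four nodes it is still easy to check these outputs by eye, but the same checks can be scripted. The sketch below parses text captured from microk8s kubectl get no and microk8s status, for example via subprocess.run(..., capture_output=True, text=True). The helper names are hypothetical and the parsing assumes the layouts shown above; for anything beyond a quick check, kubectl's -o json output is the more robust input.

```python
def parse_table(output: str) -> list[dict[str, str]]:
    """Turn kubectl's column-aligned table output into one dict per row.

    Assumption: no cell contains spaces, which holds for `get no` above.
    """
    lines = [line for line in output.strip().splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


def not_ready_nodes(get_nodes_output: str) -> list[str]:
    """Names of nodes whose STATUS column is anything other than exactly "Ready"."""
    return [row["NAME"] for row in parse_table(get_nodes_output)
            if row.get("STATUS") != "Ready"]


def enabled_addons(status_output: str) -> list[str]:
    """Add-on names listed between "enabled:" and "disabled:" in `microk8s status`."""
    addons: list[str] = []
    in_enabled = False
    for line in status_output.splitlines():
        stripped = line.strip()
        if stripped == "enabled:":
            in_enabled = True
        elif stripped == "disabled:":
            in_enabled = False
        elif in_enabled and stripped:
            addons.append(stripped.split()[0])  # drop the "# description" part
    return addons
```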

Configuring kubectl

From this point on it is possible to interact with the Raspberry Pi cluster using the Kubernetes CLI kubectl. In order to do so, kubectl needs to know on which server the control plane is running and which user credentials to use to access the cluster. This information is stored in the kubeconfig file. According to the Kubernetes documentation, kubectl uses the following process to find the kubeconfig:

By default, kubectl looks for a file named config in the $HOME/.kube directory. You can specify other kubeconfig files by setting the KUBECONFIG environment variable or by setting the --kubeconfig flag.
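To make the precedence rules concrete, here is a small Python sketch of the lookup order quoted above. It is an illustration, not kubectl's actual implementation: kubeconfig_files is a hypothetical name, and the sketch only determines which files would be read, not how kubectl merges their contents.

```python
import os
from collections.abc import Mapping
from pathlib import Path
from typing import Optional


def kubeconfig_files(flag: Optional[str] = None,
                     env: Optional[Mapping[str, str]] = None,
                     home: Optional[Path] = None) -> list[Path]:
    """Return the kubeconfig file(s) kubectl would use, following the documented order."""
    environment = os.environ if env is None else env
    # 1. An explicit --kubeconfig flag takes precedence over everything else.
    if flag:
        return [Path(flag).expanduser()]
    # 2. KUBECONFIG may list several files separated by os.pathsep
    #    (":" on Linux/macOS, ";" on Windows); kubectl merges them.
    listed = [Path(p).expanduser()
              for p in environment.get("KUBECONFIG", "").split(os.pathsep) if p]
    if listed:
        return listed
    # 3. Fall back to $HOME/.kube/config.
    base = Path.home() if home is None else home
    return [base / ".kube" / "config"]
```

With KUBECONFIG pointing at a single file and no flag given, the function returns just that one path.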

I chose to store the kubeconfig file for my cluster in a different location and set the KUBECONFIG environment variable whenever I want to interact with the cluster:

export KUBECONFIG=~/Documents/development/rpi-cluster/kubeconfig

Creating the kubeconfig File

The interesting question is which information the kubeconfig file needs to contain. There are different approaches to creating a kubeconfig file, and especially in more advanced scenarios than the one described here, there are lots of different features available.

For my cluster I simply executed the following command on the control plane node:

microk8s config

The output of this command should look something like this:

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJT...
    server: https://192.168.178.251:16443
  name: microk8s-cluster
contexts:
- context:
    cluster: microk8s-cluster
    user: admin
  name: microk8s
current-context: microk8s
kind: Config
preferences: {}
users:
- name: admin
  user:
    token: THNNNU...

Simply store the output of the command on the computer that is used to access the cluster and configure kubectl to use it.
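Since the kubeconfig contains the admin token, it is worth storing it with restrictive permissions. Below is a minimal Python sketch, assuming the output of microk8s config has already been captured as text (for example over SSH from pi-picard). store_kubeconfig and api_server are hypothetical helpers, and api_server uses a simple regular expression instead of a YAML parser, so it only handles a layout like the one shown above.

```python
import os
import re
from pathlib import Path
from typing import Optional


def store_kubeconfig(content: str, path: Path) -> Path:
    """Write kubeconfig text to `path` so that only the owner can read it (mode 0o600)."""
    path = path.expanduser()
    path.parent.mkdir(parents=True, exist_ok=True)
    # Create the file with restrictive permissions from the start.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(content)
    os.chmod(path, 0o600)  # also tighten permissions if the file already existed
    return path


def api_server(content: str) -> Optional[str]:
    """Return the first `server:` URL found in the kubeconfig text, if any."""
    match = re.search(r"^\s*server:\s*(\S+)\s*$", content, re.MULTILINE)
    return match.group(1) if match else None
```

For my setup, the target path would be Path("~/Documents/development/rpi-cluster/kubeconfig"), matching the KUBECONFIG variable set earlier.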

Using kubectl

With the kubeconfig in place, it is now possible to interact with the cluster using the kubectl command.

Adding a kubectl Shortcut

The kubectl command will be used a lot to interact with the cluster! As it is not exactly the shortest command to type, it is useful to configure a shortcut for it. I simply added the following alias to my (ohmyz.sh) shell config:

alias k='kubectl'

With this alias in place I can now use k to invoke the kubectl command. For example, executing k -h results in the following output:

kubectl controls the Kubernetes cluster manager.
Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/
Basic Commands (Beginner):
  create        Create a resource from a file or from stdin.
  expose        Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service
  run           Run a particular image on the cluster
  set           Set specific features on objects

Accessing the Kubernetes Dashboard

In order to explore the Kubernetes cluster a little and get familiar with kubectl, the next step is to access the Kubernetes Dashboard. The Kubernetes Dashboard is a web application that can be used to manage the cluster. Accessing the Kubernetes Dashboard requires a few steps:

- Identifying the service name of the dashboard

- Configuring port forwarding from the client to the cluster

- Retrieving client secrets to access the dashboard.

Identifying the Service Name

kubectl can be used to get the services running in the Kubernetes cluster. Executing

k get services

results in the following output in my cluster:

NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.152.183.1   <none>        443/TCP   30d

Obviously, the output does not contain the dashboard service. The reason is that the dashboard service is deployed in a different namespace, while k get services only lists the services in the current namespace (default, unless configured otherwise). Using k get namespace, a list of all the namespaces in the cluster can be retrieved. In my cluster the following namespaces are available:

NAME              STATUS   AGE
kube-system       Active   30d
kube-public       Active   30d
kube-node-lease   Active   30d
default           Active   30d

In MicroK8s the dashboard is deployed in the kube-system namespace. Executing k get services -n kube-system returns the services running in this namespace. In my cluster the command returns the following list of services:

NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
metrics-server              ClusterIP   10.152.183.97    <none>        443/TCP                  30d
kubernetes-dashboard        ClusterIP   10.152.183.88    <none>        443/TCP                  30d
dashboard-metrics-scraper   ClusterIP   10.152.183.143   <none>        8000/TCP                 30d
kube-dns                    ClusterIP   10.152.183.10    <none>        53/UDP,53/TCP,9153/TCP   29d

Configuring Port Forwarding

Once the exact name and namespace of a service is know it is possible to configure port forwarding to this

service. The kubnectl [documentation]/(https://kubernetes.io/docs/tasks/access-application-cluster/port-forward-access-application-cluster/) contains

a detailed example how to use port forwarding to access applications running in the cluster. To access the Kubernetes Dashboard a port of the local machine

needs to be forwarded to the appropriate service.

The following command forwards the local port 8080 to the port 443 of the service kubernetes-dashboard in the namespace kube-system.

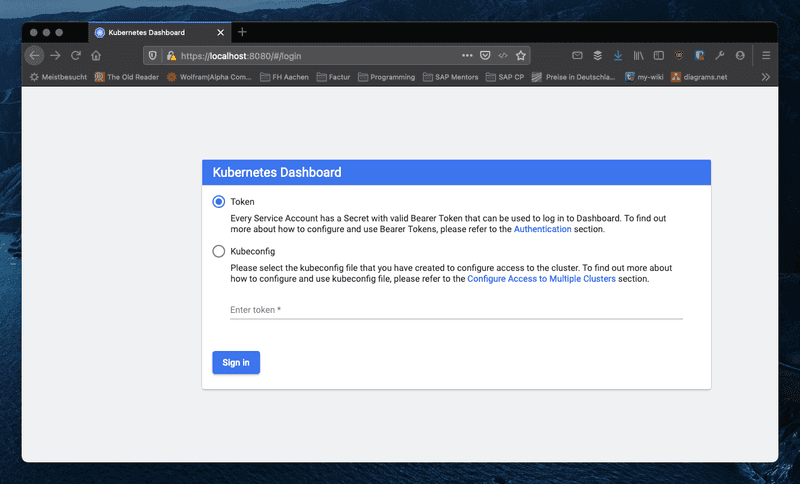

k port-forward -n kube-system service/kubernetes-dashboard 8080:443Once the port forwarding is running it is possible to access the Kubernetes Dashboard at the URL https://localhost:8080. Accessing this URL in a browser results in the login window shown below.

Login is possible by either selecting the kubeconfig file or providing a authentication token.
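The lookup and the forward can also be scripted. The sketch below reads the port of a service from the k get services -n kube-system table shown earlier, checks that a local port is free, and builds the same kubectl port-forward invocation as an argument list. The helper names are hypothetical, and the parser assumes the default table layout rather than a stable output format.

```python
import socket
from typing import Optional


def service_port(get_services_output: str, name: str) -> Optional[int]:
    """Return the first port of service `name` from `kubectl get services` table output.

    Assumption: the default column layout shown above, with PORT(S) values such as
    "443/TCP", "53/UDP,53/TCP" or, for NodePort services, "443:30443/TCP".
    """
    lines = [line for line in get_services_output.strip().splitlines() if line.strip()]
    if not lines:
        return None
    header = lines[0].split()
    for line in lines[1:]:
        row = dict(zip(header, line.split()))
        if row.get("NAME") == name:
            first = row["PORT(S)"].split(",")[0]           # "53/UDP,53/TCP" -> "53/UDP"
            return int(first.split("/")[0].split(":")[0])  # "443:30443/TCP" -> 443
    return None


def local_port_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if host:port can be bound, i.e. no other socket is using it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


def port_forward_args(namespace: str, service: str,
                      local_port: int, remote_port: int) -> list[str]:
    """Build the `kubectl port-forward` argument list used in the text above."""
    return ["kubectl", "port-forward", "-n", namespace,
            f"service/{service}", f"{local_port}:{remote_port}"]
```

Assuming kubectl is on the PATH, subprocess.run(port_forward_args("kube-system", "kubernetes-dashboard", 8080, 443)) runs the same forward as the command above and blocks until it is interrupted.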

Retrieving Secrets to Access the Dashboard

To further explore the possibilities of kubectl, I will use the authentication token to access the dashboard. In order to read the correct authentication token, it is first necessary to find out which tokens exist. The following command returns a list of all secrets in the namespace kube-system:

kubectl -n kube-system get secret

Running this command returns a long list of available secrets. In my cluster, this is the first part of the returned list:

NAME                                   TYPE                                  DATA   AGE
calico-node-token-spnt5                kubernetes.io/service-account-token   3      31d
resourcequota-controller-token-6tc67   kubernetes.io/service-account-token   3      31d
calico-kube-controllers-token-p8dlv    kubernetes.io/service-account-token   3      31d
job-controller-token-lhkst             kubernetes.io/service-account-token   3      31d
ttl-controller-token-m96b7             kubernetes.io/service-account-token   3      31d
attachdetach-controller-token-kw5fg    kubernetes.io/service-account-token   3      31d
pod-garbage-collector-token-xrjns      kubernetes.io/service-account-token   3      31d
disruption-controller-token-bv46c      kubernetes.io/service-account-token   3      31d

In order to access the Kubernetes Dashboard, a secret of type kubernetes.io/service-account-token belonging to the kubernetes-dashboard service account is required. In my cluster the respective secret is called kubernetes-dashboard-token-ccpfl. This secret can be read using the following command:

k -n kube-system describe secret kubernetes-dashboard-token-ccpfl

Executing the above command returns the following information:

Name: kubernetes-dashboard-token-ccpfl

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: 11cca18d-288b-4166-b0fb-feb1d3e49053

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1103 bytes

namespace: 11 bytes

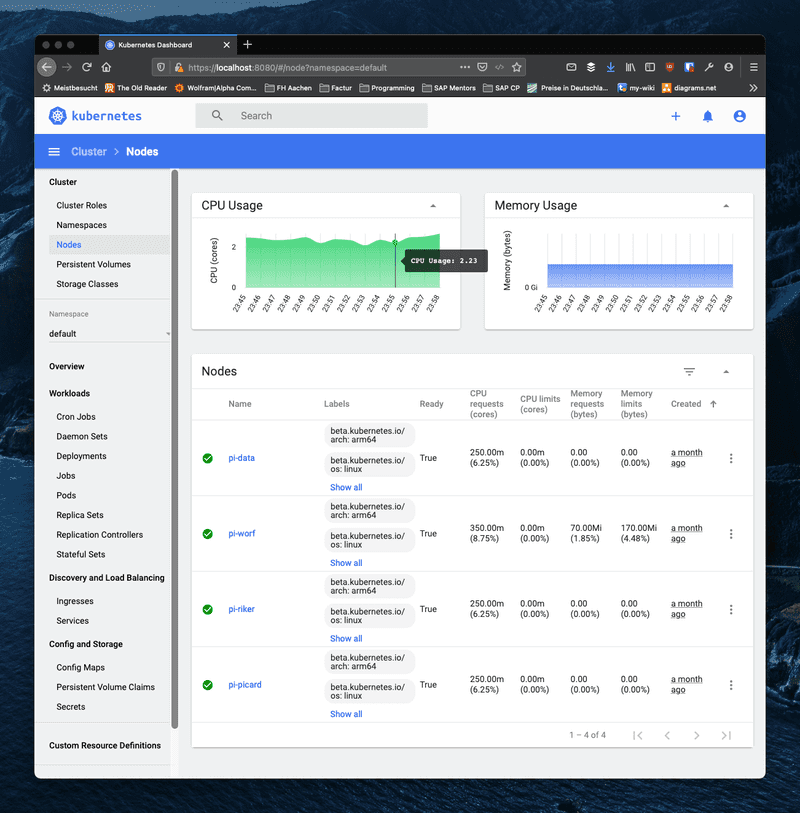

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InBsUVlzU...Pasting the returned token into the login screen should provide access to the dashboard. The following screenshot shows the node overview of my cluster.
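The two manual steps of this section, finding the secret and reading its token, can be sketched in Python as well. The helper names are hypothetical; the first function parses the kubectl -n kube-system get secret output shown above, and the second decodes the token from the output of kubectl -n kube-system get secret kubernetes-dashboard-token-ccpfl -o json. This relies on the automatically created token secret present in my v1.20 cluster; newer Kubernetes releases (1.24 and later) no longer create such secrets by default.

```python
import base64
import json
from typing import Optional

TOKEN_TYPE = "kubernetes.io/service-account-token"


def dashboard_token_secret(get_secret_output: str,
                           prefix: str = "kubernetes-dashboard-token-") -> Optional[str]:
    """Name of the first service-account-token secret whose name starts with `prefix`."""
    for line in get_secret_output.splitlines()[1:]:  # skip the header row
        columns = line.split()
        if len(columns) >= 2 and columns[0].startswith(prefix) and columns[1] == TOKEN_TYPE:
            return columns[0]
    return None


def token_from_secret_json(secret_json: str) -> str:
    """Decode data.token from `kubectl get secret <name> -o json` output.

    Secret values are stored base64-encoded in the API object; `kubectl describe`
    printed this token already decoded, which is why it could be pasted directly.
    """
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"]["token"]).decode("utf-8")
```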

Outlook

Accessing the Kubernetes Dashboard concludes this blog. In the next blog I'll describe how to build a small Node.js application and deploy it into the cluster.

Christian